At first, it seemed a silly idea to create buttons our new puppy could press to tell us she wanted something. A few weeks after introducing them to Poppy, our 8-week-old puppy, the idea seems far from silly. Poppy has started to learn that something happens when she presses these buttons, and what that something is depends on the button she presses. She has already grasped that one of the three buttons I made lets her tell us she wants to go out into the garden when the door is closed.

Sure, the idea was silly and just an excuse to have some fun. I started off by prototyping with a standard Zigbee button, playing with notifications and the languages available for text-to-speech messages. I also created an amusing character for Poppy’s text-to-speech messages: after trying different languages and adapting some of the spoken words to be more playful, Poppy ended up with a French voice, and words such as “Humans” were read out as “Hoomans”. At this stage of testing my partner still hadn’t vetoed the idea, so I moved on to the design stage.

Often at this stage I feel like Sid, the toy-cannibalising antagonist who lives next door to Woody and his friends in Toy Story, mostly because I end up trying to retrofit my crazy projects into something that looks half decent. When I fail, the projects end up in that “special box” we all have, but those that succeed live to see another day. While this might make you giggle, there is a serious element to it: user experience. In the maker space, it is not uncommon to see exposed circuits and wires. For me, that is part of the fun, but it isn’t how I want my finished projects to look, so I look for simple, intuitive, and good-looking ways to enclose them. This is one of those examples.

Why does my dog need these?

She doesn’t. But with a connected smart home, the things you can do are endless! I’ve grown up with dogs all my life but have never had a puppy, and with that comes the great responsibility of training her. Rather than have her bark or scratch at the door, I wanted to teach her to use one of these buttons to notify us that she needs something.

I guess the solution is no different from panic buttons, butler buttons, or buttons in shops and cafes, where it is helpful to get a notification, or even a text-to-speech announcement, when a button is pressed. The options in Home Assistant are truly endless and provide real opportunities to create accessible and inclusive solutions. For example, the buttons could easily integrate with lights or digital locks, or keep repeating an action until something else happens, such as a door being opened.
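As a rough illustration of the “repeat until the door is opened” idea, here is a minimal Home Assistant script sketch. It is not part of my setup: the script name, `notify.mobile_app_my_phone`, `binary_sensor.garden_door` and the two-minute interval are all hypothetical placeholders. It uses a `repeat` action with an `until` condition, plus a cap on the number of reminders so it cannot loop forever.

```yaml
script:
  # Hypothetical script name; not from my actual configuration.
  poppy_door_reminder:
    alias: "Remind until the garden door is opened"
    sequence:
      - repeat:
          sequence:
            # Hypothetical notify service for a phone running the companion app.
            - service: notify.mobile_app_my_phone
              data:
                message: "Poppy is still waiting by the garden door!"
            - delay:
                minutes: 2
          # Checked after each iteration: stop once the door opens or after 10 reminders.
          until:
            - condition: or
              conditions:
                # Hypothetical door contact sensor; "on" means open.
                - condition: state
                  entity_id: binary_sensor.garden_door
                  state: "on"
                - condition: template
                  value_template: "{{ repeat.index >= 10 }}"
```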

I made three of these buttons. One notifies me when Poppy wants to go and play in the garden, one sits near her lead for when she wants to go for a walk, and the third sits by her food.

How to make your own pet smart button

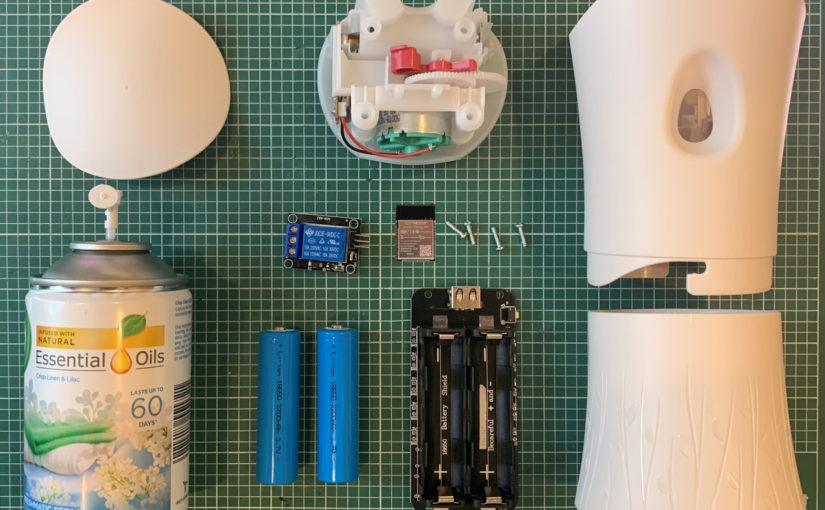

To make these buttons I bought a set of radio-frequency (RF) buttons. The pack included three nice-looking buttons, each with a paw embossed on it, and a plug-in receiver chime. I bought these hoping I could strip them and replace their insides with my own Zigbee button. Fortunately, the buttons were held together with a few small screws and came apart really easily. Inside was a small RF circuit, which again came out easily once a few small screws were removed.

For the “smart” part, I bought a Sonoff SNZB-01 Zigbee button and removed it from its Sonoff case. This was slightly trickier and needed levering out: I used a plastic pry tool and, with a little wriggling, was able to remove the circuit from the case. As the case holds the battery in place, I used some Gorilla tape to secure the battery to the circuit.

Then comes the cannibalisation part: combining the new button with the case from the old one. The Sonoff button needs to sit in the RF button’s case with the button centred in the case, so that pressing the case also presses the button. I folded some tape over a few times to raise the Sonoff button slightly. Not over-tightening the screws on the case also gave the button more play to be pressed.

Home Assistant integration

Home Assistant provides great flexibility and extensibility. For this project, I created several helpers, which I use to hold the configuration for each button and to link each button press to a mobile notification and a text-to-speech message played over my Sonos speakers. My Home Assistant configuration is managed through YAML, but the same can be achieved in the UI.

For each button I created the following helpers and automation:

Trigger-based template sensor

The Zigbee button fires events when pressed. I’m using a trigger-based template binary sensor whose state changes when the button is pressed. This gives me a sensor that I can show in the UI and also capture insights from.
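Here is a minimal sketch of such a trigger-based template binary sensor, assuming the button is paired through ZHA, which fires a `zha_event` for each press. The IEEE address and sensor name are placeholders: you can find your button’s address by listening for `zha_event` under Developer Tools > Events. If you use Zigbee2MQTT instead, the trigger would look different.

```yaml
template:
  - trigger:
      # Fires on any ZHA event from this one device (placeholder IEEE address).
      - platform: event
        event_type: zha_event
        event_data:
          device_ieee: "00:11:22:33:44:55:66:77"
    binary_sensor:
      # Becomes binary_sensor.poppy_garden_button (hypothetical name).
      - name: "Poppy Garden Button"
        unique_id: poppy_garden_button
        icon: mdi:paw
        # Each matching event renders the sensor "on"...
        state: "true"
        # ...and it switches itself back off after 5 seconds.
        auto_off: "00:00:05"
```

Because the sensor flips on and then back off for every press, its history doubles as a simple log of when the button was used.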

Note: I have a split configuration where my entities and integrations are defined in separate folders and YAML files. I wasn’t able to get this template sensor to work like my other templates, so I had to keep it in my main configuration.

Messages

Both the text-to-speech announcement and the mobile notification message are stored in input_text helpers.
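A minimal sketch of the two input_text helpers for one button; the helper names are hypothetical. Leaving out `initial` means Home Assistant restores the last value after a restart, so text edited in the UI is kept.

```yaml
input_text:
  # Read out by the text-to-speech script (hypothetical helper name).
  poppy_garden_tts_message:
    name: Poppy garden TTS message
    max: 255
  # Sent as the mobile notification body (hypothetical helper name).
  poppy_garden_notification_message:
    name: Poppy garden notification message
    max: 255
```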

Volume and Delay

To control the volume of the text-to-speech message and how long to wait before the button can be used again, I created two input_number helpers.
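Something along these lines would work, with hypothetical names and ranges. The volume helper is a 0 to 1 slider because `media_player.volume_set` expects a `volume_level` between 0 and 1, and the delay helper holds a cooldown in seconds.

```yaml
input_number:
  # Volume passed to the speakers (0.0 to 1.0).
  poppy_button_tts_volume:
    name: Poppy button TTS volume
    min: 0
    max: 1
    step: 0.05
    mode: slider
  # Cooldown before the button can trigger the automation again.
  poppy_button_delay:
    name: Poppy button delay
    min: 0
    max: 600
    step: 10
    unit_of_measurement: s
    mode: box
```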

Automation

The automation for each button calls a text-to-speech script that snapshots my Sonos speakers, groups the speakers together, sets the volume and reads out the message before restoring the speakers to their previous state.
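A sketch of how the automation and script could fit together. It assumes Google Translate TTS with `language: fr` for Poppy’s French voice and two Sonos speakers named `media_player.living_room` and `media_player.kitchen`; these, the notify service and all helper entity IDs are hypothetical. Running the automation in `single` mode with a final `delay` is one way to turn the delay helper into a cooldown: presses during the delay are ignored.

```yaml
automation:
  - alias: "Poppy garden button pressed"
    # single + trailing delay = cooldown; extra presses are dropped silently.
    mode: single
    max_exceeded: silent
    trigger:
      - platform: state
        entity_id: binary_sensor.poppy_garden_button
        to: "on"
    action:
      - service: notify.mobile_app_my_phone
        data:
          title: "Poppy"
          message: "{{ states('input_text.poppy_garden_notification_message') }}"
      - service: script.poppy_tts
        data:
          message: "{{ states('input_text.poppy_garden_tts_message') }}"
          volume: "{{ states('input_number.poppy_button_tts_volume') | float(0.3) }}"
      # Cooldown length comes from the delay helper (seconds).
      - delay:
          seconds: "{{ states('input_number.poppy_button_delay') | int(30) }}"

script:
  poppy_tts:
    alias: "Poppy TTS announcement"
    fields:
      message:
        description: "Text to read out"
      volume:
        description: "Volume level between 0 and 1"
    sequence:
      # Remember the current state of both speakers, including grouping.
      - service: sonos.snapshot
        data:
          entity_id:
            - media_player.living_room
            - media_player.kitchen
          with_group: true
      # Group the kitchen speaker with the living room speaker.
      - service: media_player.join
        target:
          entity_id: media_player.living_room
        data:
          group_members:
            - media_player.kitchen
      - service: media_player.volume_set
        target:
          entity_id:
            - media_player.living_room
            - media_player.kitchen
        data:
          volume_level: "{{ volume }}"
      # Speak the message in French on the group coordinator.
      - service: tts.google_translate_say
        data:
          entity_id: media_player.living_room
          message: "{{ message }}"
          language: fr
      # Wait for playback to start, then to finish; timeouts act as a safety net.
      - wait_template: "{{ is_state('media_player.living_room', 'playing') }}"
        timeout: "00:00:10"
      - wait_template: "{{ not is_state('media_player.living_room', 'playing') }}"
        timeout: "00:01:00"
      # Restore the snapshot taken at the start.
      - service: sonos.restore
        data:
          entity_id:
            - media_player.living_room
            - media_player.kitchen
          with_group: true
```

Keeping the speaker handling in a script means all three button automations can share it and only pass in their own message and volume.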

Lovelace

Poppy has her own pretty cool dashboard where we track her health, food, and diet as well as her location from her LTE GPS collar. The buttons I made reside in their own Lovelace view in her dashboard.

As with most, if not all, of my automations, I use helpers to manage the configuration, as described above for this project. I then expose the helpers in Lovelace, which makes it easier to manage and change their values. For example, the volume, the text-to-speech announcement and the mobile notification message can all be changed in the UI for each of the three buttons I made.
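For example, a built-in entities card is enough to expose one button’s helpers for editing. This is a minimal sketch; the entity IDs are hypothetical.

```yaml
type: entities
title: Garden button
entities:
  # Shows when the button was last pressed under the name.
  - entity: binary_sensor.poppy_garden_button
    name: Button
    secondary_info: last-changed
  - entity: input_text.poppy_garden_tts_message
    name: Announcement
  - entity: input_text.poppy_garden_notification_message
    name: Notification
  - entity: input_number.poppy_button_tts_volume
    name: Volume
  - entity: input_number.poppy_button_delay
    name: Delay
```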

I often use the Fold Entity Row card to collapse helpers out of the way. Alongside these helpers, I’ve used the Mini Graph Card to show when each button has been pressed over the last day. For those interested in the YAML for the Lovelace cards, I have shared it here.
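A sketch combining the two custom cards, assuming both are installed (for example through HACS); the entity IDs and labels are hypothetical. The `state_map` turns the binary sensor’s off/on states into values the graph can plot, and `aggregate_func: max` stops short presses being averaged away within each time bucket.

```yaml
type: vertical-stack
cards:
  # Button activity over the last 24 hours.
  - type: custom:mini-graph-card
    name: Garden button
    hours_to_show: 24
    points_per_hour: 4
    aggregate_func: max
    entities:
      - entity: binary_sensor.poppy_garden_button
    state_map:
      - value: "off"
        label: Idle
      - value: "on"
        label: Pressed
  # Helpers folded away under a single header row.
  - type: entities
    entities:
      - type: custom:fold-entity-row
        head:
          type: section
          label: Garden button settings
        entities:
          - input_text.poppy_garden_tts_message
          - input_text.poppy_garden_notification_message
          - input_number.poppy_button_tts_volume
          - input_number.poppy_button_delay
```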

I hope this is helpful and that other pet owners can see the benefit of these training buttons. If anyone needs me, I’ll be training and playing with Poppy 🙌🐶🐾 and no doubt tinkering with some other fun project!